Extended Abstract

The Zeitgeist

Extreme Programming (XP), it would seem, is in tune with an emerging, emergent intellectual Zeitgeist. This would normally not be a surprising observation. After all, the architects of XP are not unfamiliar with this work. But a case can be made that these architects did not design XP. Indeed, in one sense, to have had the courage of these convictions would have demanded that these designers not design XP. They simply had to remove a few roadblocks and allow it to unfold in front of them. They didn't so much have to craft it as make sure they stayed out of its way. They had to observe what worked and what didn't, take pieces of what worked, and fit them together. The brilliance lay in having had the boldness and brashness to do so. This is far from a simple thing.

This Zeitgeist is manifested in a number of burgeoning disciplines. It emphasizes adaptation over prediction, growth over planning, and flexibility over control. From this perspective, order emerges not from rigid master plans and blueprints, but from a fast-paced, feedback-driven interaction with the volatile, rapidly changing requirements placed on the system by its environment. It draws from studies of evolution, genetics, self-organization, fractals, complexity, adaptation, systems, and chaos. It gathers insights from the worlds of economics, neural networks, engineering, industrial design, physics, management, patterns, and architecture. It holds that order emerges in a seemingly spontaneous fashion, not from top-down design, but from a highly dynamic, adaptive process of bidirectional reconciliation of forces between parts and wholes. Such processes are driven more by feedback than by planning. They harvest hindsight instead of trying to predict and control the future. When the pace of change overwhelms our ability to predict, the race will go, naturally, to those who are quick on their feet.

That the emergence of XP is itself something of a case study in these sorts of processes at first may strike one as remarkable, but upon reflection, it seems almost inevitable, and utterly in tune with the Zeitgeist.

Teleonomy

Evolution is smarter than you are

--Richard Dawkins

Man Plans, God Laughs

--European Proverb

Teleonomy is the science of adaptation. It is "the quality of apparent purposefulness in living organisms that derives from their evolutionary adaptation". The term was coined to stand in contrast with teleology. A teleological process is one that is planned in a purposeful way by a sentient, intelligent being. Artifacts that emerge from such a process are the products of foresight, and intent. A teleonomic process, such as evolution, produces products of stunning intricacy without the benefit of such a guiding intelligence. Instead, it blindly accrues information about what has worked, exploiting feedback from the environment via the selection and survival of fitter coalitions of such insight. It unwittingly choreographs a grand audition of a horde of variations on what it has learned thus far, culling the also-rans, and casting the winners in its next production. It hoards hindsight, and uses it to make "predictions" about how to cope with the future.

It does this by spawning subtle variations, one step at a time. The steps are small, because, as Dawkins has observed, there are a lot more ways of being dead than being alive.

It may seem odd to cast a process for building programs, which are, after all, designed artifacts produced by human beings, as having a teleonomic character. But a case can be made that as complexity increases and change accelerates, programmers are increasingly in a position where their predictions are rendered moot and their ability to adapt rapidly becomes primary. When this is the case, the lessons we can learn from teleonomic processes become more valuable and relevant: the role of feedback, the power of spawning and testing variations, the importance of small steps and of vitality, and the importance of artifacts that embody our memory of what has worked before.

Teleonomic processes, it would seem, are capable of relentlessly converging on designs that are arguably beyond anything built by the hand of man. Like market economies, they can adapt in ways that master planned systems simply cannot. Often, they are the only means by which complex entities can be grown.

Indeed, it is possible to cast the bureaucratic waterfall approaches of the last century as the software engineering equivalent of scientific creationism.

Teleology gives way to teleonomy when no single individual's intent is the controlling factor anymore. Is this chaotic? Perhaps. The insight of chaos theory is that multiple determining factors, lost in a complex soup, can interact in ways that are effectively unpredictable. There may be some underlying determinism, but it becomes next to impossible to discern it without taking the cover off the box. Past a certain point, even deterministic artifacts like programs become so complex in their behavior that we stop thinking of them as Newtonian mechanisms and start treating them as black-box beings that exhibit behavior. Is computer science becoming a behavioral science?

Feedback and Adaptation

XP eschews the top-down analysis and design that are the hallmark of traditional software engineering approaches. It embraces instead a regimen of short iterations, with client feedback pervading the lifecycle. The short iterations ensure that even when things start to drift off course, expectations and reality are realigned soon enough.

Dependence on feedback rather than planning is a hallmark of teleonomic systems. They use this feedback to decide what to cull and where to grow. XP, too, uses client feedback to adjust project scope and priorities, and hence to cull and grow as well. The short iterations XP emphasizes play somewhat the same role as fixed lifespans in the wild: they ensure that failing experiments don't crowd out the possibility of success down the road, and that success is quickly reinforced. As disquieting as mortality may be for us as individuals, it ensures that there is room for the next generation to flourish. Great timbers must fall for saplings to see the sun.

Small iterations make sure that tensions are resolved before strain accumulates to cataclysmic levels. Foote and Yoder call this the SOFTWARE TECTONICS pattern.

Disseminating Best Practices

One of the more striking aspects of XP is the requirement that all coding be done in pairs. This seems odd for programmers, who have customarily been solitary, nocturnal beasts, but would come as no surprise at all to commercial aviators, or surgeons.

A pure pair programming approach requires that every line of code written be added to the system with two programmers present. One types, or "drives", while the other "rides shotgun" and looks on. In contrast to traditional solitary software production practices, pair programming subjects code to immediate scrutiny, and provides a means by which knowledge about the system is rapidly disseminated.

Indeed, reviews and pair programming provide programmers with something their work would not otherwise have: an audience. Sunlight, it is said, is a powerful disinfectant. Pair practices add an element of performance to programming. An audience of one's peers gives programmers an immediate incentive to keep their code clear and comprehensible, as well as functional.

An additional benefit of pairing is that accumulated wisdom and best practices can be rapidly disseminated throughout an organization through successive pairings. This is, incidentally, the same benefit that sexual reproduction brought to the genome. Sexual reproduction is how elements of the genome communicate. Fit practices become more plentiful, and supplant those that are less successful.

By contrast, if no one ever looks at code, everyone is free to think they are better than average at producing it. Programmers will instead respond to whatever relatively perverse incentives do exist. Lines-of-code metrics, design documents, and other indirect measurements of progress and quality can become central concerns.

The Scullers of

Richard Dawkins gives a striking example, in The Selfish Gene, of how an agent (in his case, a sculling coach) might select for desirable traits without knowing exactly what they were, merely by contriving to mix team members at random and tracking the results. For instance, if it were beneficial to have three left-handers on one side of the boat and three right-handers on the other, he could find, by tracking successive heats, a team that possessed this characteristic, if it mattered, without knowing that this was what he was looking for. This is, in essence, how genetic algorithms work.
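
The coach's procedure can be sketched as a bare mutation-and-selection loop. This is the core of an evolutionary algorithm; a full genetic algorithm would also recombine crews, and that step is omitted here. The scoring rule, the seat counts, and all names are hypothetical. The coach only ever sees race times. The rule that left-handers belong on one side and right-handers on the other is hidden inside `race_time`, yet the search finds that arrangement anyway.

```python
import random

# Hypothetical boat layout: the first three seats are port, the last three starboard.
PORT_SEATS = 3
STARBOARD_SEATS = 3


def race_time(crew, handedness, rng):
    """Simulated heat: faster when port seats hold left-handers and starboard
    seats hold right-handers, plus a little random noise. The coach sees only
    the returned time, never the reason for it."""
    port, starboard = crew[:PORT_SEATS], crew[PORT_SEATS:]
    good = sum(handedness[r] == "L" for r in port)
    good += sum(handedness[r] == "R" for r in starboard)
    return 100.0 - 5.0 * good + rng.uniform(0.0, 1.0)


def coach(handedness, heats=5000, seed=0):
    """Mutation and selection: perturb the best crew so far, race it,
    and keep the variant only if it posts a faster time."""
    rng = random.Random(seed)
    rowers = list(handedness)
    crew = rng.sample(rowers, PORT_SEATS + STARBOARD_SEATS)
    best = race_time(crew, handedness, rng)
    for _ in range(heats):
        candidate = crew[:]
        seat = rng.randrange(len(candidate))
        bench = [r for r in rowers if r not in candidate]
        if rng.random() < 0.5 and bench:
            candidate[seat] = rng.choice(bench)      # bring in a benched rower
        else:
            other = rng.randrange(len(candidate))    # or swap two seats
            candidate[seat], candidate[other] = candidate[other], candidate[seat]
        time = race_time(candidate, handedness, rng)
        if time < best:                              # selection: keep the winner
            crew, best = candidate, time
    return crew
```

Each seat that gains a suitably handed rower cuts the time by more than the noise can offset. The loop therefore never keeps a crew that is worse arranged than the one before it.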

The XP practice of treating each iteration as a generation, and of moving teams that don't finish their iteration on to something else, regardless of whether they ask to finish the task, has this same characteristic. Teams that don't work are, in effect, broken up. In this way, the same kind of search takes place for whatever chemistry it is that makes a team effective.

A genome scatters phenotypes willy-nilly as it lurches towards a better accommodation with the ecosphere in which it resides. It doesn't care a whit about the fate of individual phenotypes or individual organisms. It cares only about dispersing teams of genes that better fit the challenges it faces as widely through the population as it can. Of course, the individual cares deeply about this. Fortunately, programmers rarely find their lives in physical peril when they miss an iteration objective. Instead, their current pairing and task assignment perishes in their stead, while the programmers endure to code another day.

Freedom from Choice

Stewart Brand, in How Buildings Learn, observed that paradoxically, the constraints imposed by, for instance, rows of columns that partition a building into fixed-sized stalls, could foster architectural inventiveness, by limiting the opportunity for flights of fancy and excess, and focusing architects on more utilitarian concerns.

Don Roberts has observed that with XP, the requirement that you build test cases first produces different kinds of designs than conventional practice does, because building the unit test jigs first forces you to build modular, stand-alone, unit-testable objects up front.
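
Here is a minimal sketch of that effect, using Python's standard `unittest` module. Everything named here (`Cart`, `CartTest`, the prices) is hypothetical. The test is written before the class and must run without a database. That requirement forces the price lookup to be handed to the object rather than hard-wired inside it, so the resulting `Cart` is small, stand-alone, and unit-testable almost by construction.

```python
import unittest


class Cart:
    """Hypothetical object shaped by its test: the price source is passed in,
    so the cart can be exercised without a database or network."""

    def __init__(self, price_of):
        self._price_of = price_of   # any callable: item name -> price in cents
        self._items = []

    def add(self, item, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be positive")
        self._items.append((item, quantity))

    def total(self):
        return sum(self._price_of(item) * qty for item, qty in self._items)


class CartTest(unittest.TestCase):
    # Written first: these tests fix Cart's interface before Cart exists.
    def setUp(self):
        prices = {"apple": 50, "pear": 75}
        self.cart = Cart(prices.__getitem__)   # a plain dict stands in for a price service

    def test_empty_cart_totals_zero(self):
        self.assertEqual(self.cart.total(), 0)

    def test_total_sums_quantities(self):
        self.cart.add("apple", 3)
        self.cart.add("pear")
        self.assertEqual(self.cart.total(), 225)

    def test_rejects_nonpositive_quantity(self):
        with self.assertRaises(ValueError):
            self.cart.add("apple", 0)
```

If the file were saved as, say, `cart.py`, the tests could be run with `python -m unittest cart`.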

This practice can be seen as one that limits coupling, and keeps it limited. It can force the kinds of healthy part/whole relationships that Kauffman discusses in his work on NK networks.
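
In Kauffman's NK model, each of N parts contributes to overall fitness according to its own state and the states of K other parts. As K grows, the fitness landscape becomes more rugged and local optima multiply, so incremental adaptation gets stuck more easily. The sketch below rests on assumptions of our own. Each bit's K partners are simply its right-hand neighbours (wrapping around), contributions are uniform random numbers, and a "local optimum" is a configuration that no single-bit flip improves. With K = 0 there is exactly one local optimum; with K = N - 1 there are typically many.

```python
import itertools
import random


def nk_landscape(n, k, seed=0):
    """Random NK fitness function: bit i contributes a random value that
    depends on its own state and on its k right-hand neighbours (wrapping)."""
    rng = random.Random(seed)
    # tables[i] maps the (k+1)-bit neighbourhood of bit i to a contribution.
    tables = [
        {bits: rng.random() for bits in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]

    def fitness(genome):
        total = 0.0
        for i in range(n):
            neighbourhood = tuple(genome[(i + j) % n] for j in range(k + 1))
            total += tables[i][neighbourhood]
        return total / n

    return fitness


def count_local_optima(n, k, seed=0):
    """Exhaustively count genomes that no single-bit flip can improve."""
    fitness = nk_landscape(n, k, seed)
    optima = 0
    for genome in itertools.product((0, 1), repeat=n):
        f = fitness(genome)
        neighbours = (
            genome[:i] + (1 - genome[i],) + genome[i + 1:] for i in range(n)
        )
        if all(fitness(g) < f for g in neighbours):
            optima += 1
    return optima
```

With no coupling, each part can settle on its best state independently, so there is a single peak to climb. With full coupling, every change to one part shifts the value of all the others, and the landscape fragments into many small peaks.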

A Fractal Model

The fractal model is yet another in a long series of iterative, evolutionary software development perspectives that have been discussed over the last fifteen years or so. It holds that objects evolve via a three-phase process of prototyping, exploration, and consolidation.

During the prototype phase, you simply get something on the air. You may engage in expedient code borrowing, or throw-away design practices. It is the object's way of learning its way around the design space. You posit an initial point in the design space, and see where opportunity leads you.

During exploration, changing requirements buffet the object and cause it to adapt. This can frequently undermine its structure, since pat assumptions about the future may be overtaken by reality. The entropy of the system tends to increase as such changes are made.

During consolidation, the system is refactored in order to exploit opportunities for greater generality and power. This is the entropy-reduction phase. Frequently, the system will shrink as commonalities are exploited and sloppy code is excised. Most importantly, such consolidation will often lead to the emergence of distinct, black-box components, as the identities of these reusable entities come to reflect the distinct concerns identified in the problem space.
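
Here is a small, hypothetical illustration of consolidation in Python. During exploration, two near-duplicate functions accreted. Consolidation extracts their common shape into one general function, after which the special cases become data. The function and field names are invented for the example. The old functions are kept here only so their equivalence to the consolidated version can be checked. In practice they would be deleted once their callers were migrated, which is where the shrinkage comes from.

```python
# Exploration: two near-duplicates accreted as requirements arrived.
def customer_row(customer):
    return ",".join([str(customer["name"]), str(customer["email"])])


def order_row(order):
    return ",".join([str(order["id"]), str(order["total"])])


# Consolidation: the shared shape is extracted into one general function,
# and each special case shrinks to a tuple of field names.
def csv_row(record, fields):
    """Render the named fields of a mapping as one comma-separated line."""
    return ",".join(str(record[f]) for f in fields)


CUSTOMER_FIELDS = ("name", "email")
ORDER_FIELDS = ("id", "total")
```

A new record type now costs one line of configuration rather than another copied function.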

One of the things that distinguishes this model is that objects evolve at all levels of a system, within and beyond the applications that spawn them. Programmers may move from phase to phase in seconds, hours, days or weeks. The evolution of more coarse grained elements of the system proceeds in a self-similar fashion, perhaps at a slower time scale. Indeed, this self-similarity is what gives this notion its name.

As a family of applications evolves, frameworks emerge too. Framework building is an emergent process. While the jury is still out, we believe that XP will promote the emergence of domain-specific reusable artifacts. All the precursors and preconditions are there to cull these commonalities from the mire; indeed, it seems all but inevitable. It may instead be that XP has set the stage for code too cheap to meter, and that few reusable artifacts will emerge from such projects. We think it more likely that as more XP teams and projects mature, frameworks and components will flower and ripen too.

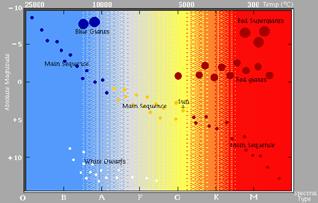

The emergence of components is oddly reminiscent of stellar evolution; we are reminded of Hertzsprung-Russell diagrams. Not all stars make their way all the way down the main sequence. Not all code merits the attention that would justify its emergence as a distinct component. Those artifacts that do, by virtue of proven, post-hoc utility, can justify the cost of lavishing the resources on them needed to make them full-blown components.

Experience resides in the artifacts, and in the minds of the artificers who craft them. You can't have one without the other. They evolve together. They co-evolve.

Of course, Conway's Law holds that the structure of the artifacts an organization produces mirrors the structure of the organization itself. If this is so, and objects can evolve independently and break free of the applications that spawn them, then we must be prepared to deploy resources correspondingly.

Team members, and even entire teams, must be allowed to follow the grain of these spaces when such opportunities emerge. For corporations, that may mean that these teams are no longer even corporate. They must be free to spin away from the organizations that incubated them too. These teams may be long lived, or quite ephemeral. They may be focused on emerging artifacts, and not the projects that spawned them. They must be allowed to match available talent to the task at hand. They must be allowed to follow opportunities as they unfold before them, or these opportunities will be lost.

We may need to change the way teams are recruited and deployed so that they can focus on particular problems that may cross departmental, and even corporate boundaries. They will exhibit multiple identities and affiliations, to themselves, to their corporations, to their ad-hoc teams, as well as to their brainchildren, that will help to erode artificial boundaries that often prove to be obstacles to collaboration.

This may mean that successful artifacts spin their designers and caretakers out of our large organizations and into autonomous boutiques. We need not fear this fragmentation. We need to encourage it.

We must be prepared to deploy talent differently than we have in the past. If consolidation opportunities really do come late in the lifecycle, during what has customarily been called the maintenance phase, then current programmer deployment practices are completely backwards, because it is during this phase that our best design talent may be most valuable.

We need to rethink how pools of talent are managed. Should we have tiger teams, or pools of ready talent, waiting to be deployed? How do we decide who becomes a generalist, and who specializes? Do we let them choose?

Tools

Historians of science have observed that you can often predict progress as much by watching the evolution of tools and instruments as by looking at ideas and theories: once the tools set the stage for progress, progress quickly follows. A case can be made that programming languages and tools played a pivotal role in the emergence of XP. The browsers and program management tools present in the Smalltalk community allow programmers to cultivate code more nimbly than do their more cumbersome cousins. Power tools like the Refactoring Browser and SmallLint permit changes to code to be made quickly and safely in seconds, where minutes, or even hours, of tedious modifications would otherwise have been needed.

To cope with rapid change, code must be able to change rapidly. Good refactoring tools allow more fine-grained changes to take place, and thereby make it easy to be fast on your feet.
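
To make the idea of a tool-driven, fine-grained change concrete, here is a minimal sketch of one automated refactoring. It is written in Python, not Smalltalk, and is not the Refactoring Browser's actual implementation. It renames a function and its call sites by rewriting the parse tree rather than editing text. The class and function names are hypothetical. Unlike a real refactoring tool, it does no scope analysis or precondition checking, so a local variable that happens to share the old name would be renamed as well. `ast.unparse` requires Python 3.9 or later, and comments in the source are not preserved.

```python
import ast


class RenameFunction(ast.NodeTransformer):
    """Rename a function definition and every plain-name reference to it."""

    def __init__(self, old, new):
        self.old, self.new = old, new

    def visit_FunctionDef(self, node):
        if node.name == self.old:
            node.name = self.new
        self.generic_visit(node)   # keep walking into the function body
        return node

    visit_AsyncFunctionDef = visit_FunctionDef

    def visit_Name(self, node):
        if node.id == self.old:
            node.id = self.new
        return node


def rename_function(source, old, new):
    """Parse, transform, and regenerate source text with the rename applied."""
    tree = ast.parse(source)
    tree = RenameFunction(old, new).visit(tree)
    return ast.unparse(tree)   # Python 3.9+
```

Because the change is made on the tree, a call such as `area(2, 3)` is renamed while the unrelated text `area` inside a string literal is left alone. A text search-and-replace would not draw that distinction.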

Of course, programmers must not only cultivate code, they must also collaborate. Program management tools like the Envy Manager make coping with versions, releases, configurations, and multiple applications easier. Tools to better assist pairing, or remote collaboration in space or time, can be envisioned. Armed with such tools, our confidence that we can cope with the uncertainty that rapid change brings with it is enhanced.

Tools are needed as well to help clients, stakeholders, managers, and purse string holders see what is happening too. Like those little windows in the fence at construction sites, such tools will help assure them that all is going well. It is customary to think of such people as potentially meddlesome, but feedback to and from them may well have just the opposite effect. They have reasons to want to know; they aren't just pains in the ass.

Acknowledgments

It seems only fitting, given the topic of this essay, to acknowledge that these ideas gestated in a community of gifted minds before emerging in a form such as this through ours. Conversations with architects of this community, and sundry fellow travelers such as Kent Beck, Ward Cunningham, Ron Jeffries, Ralph Johnson, Richard Gabriel, Ron Goldman, Don Roberts, Joseph Yoder, and John Brant shaped and refined our own thinking about these issues. It is a privilege to be a party to such collaboration.